{Updated on 3/28/16 to reflect the name change from Google Webmaster Tools to Google Search Console.}

In late July, Google added Index Status to Google Search Console to help site owners better understand how many pages are indexed on their websites. Index Status can also help webmasters diagnose indexation problems, which can be caused by redirects, canonicalization issues, duplicate content, or security problems. Until now, many webmasters relied on less-than-optimal methods for determining true indexation, such as running site: commands against a domain, subdomain, subdirectory, etc. This was a maddening exercise for many SEOs, since the number shown could change radically (and quickly).

So, Google adding Index Status was a welcome addition to Search Console. That said, I’m getting a lot of questions about what the reports mean, how to analyze the data, and how to diagnose potential indexation problems. So that’s exactly what I’m going to address in this post. I’ll introduce the reports and then explain how to use that data to better understand your site’s indexation. Note, it’s important to understand that Index Status doesn’t necessarily answer questions. Instead, it might raise red flags and prompt more questions. Unfortunately, it won’t tell you where the indexation problems reside on your site. That’s up to you and your team to figure out.

Index Status

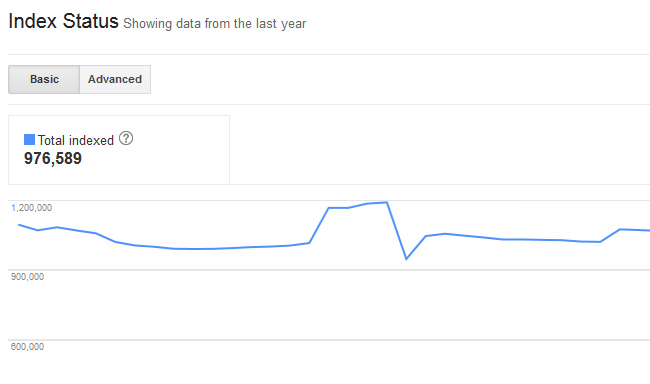

The Index Status reports are under the “Google Index” tab in Google Search Console. The default report (or “Basic” report) will show you a trending graph of total pages indexed for the past year. This report alone can signal potential problems. For most sites, you’ll see normal trending over time. If you are continually building content, the number should keep increasing. If you are weeding out content, the number might decrease. But overall, most sites shouldn’t see any crazy spikes.

For example, this is a relatively normal indexation graph. The site might have weeded out some older content and then begun building new content (which is why you see a slight decrease first, followed by an increase down the line):

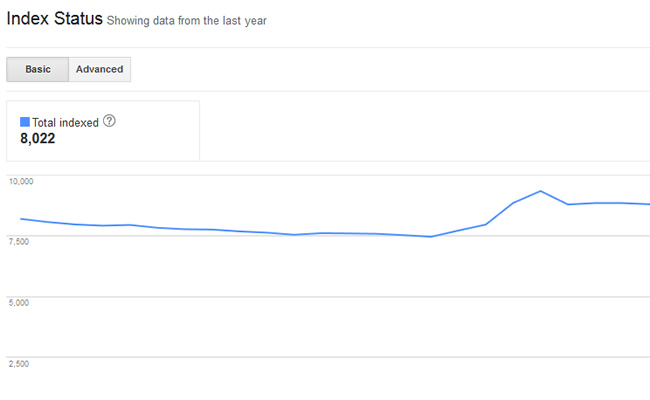

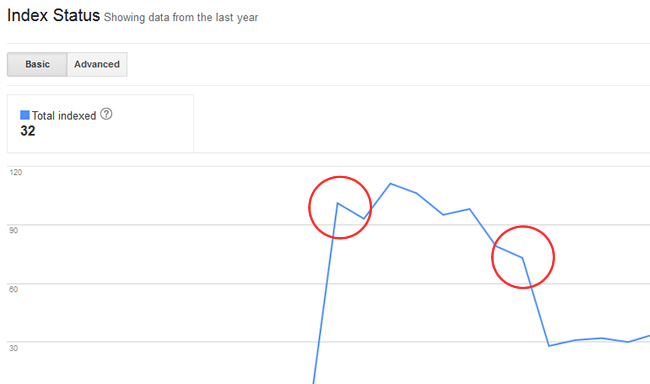

But what about a trending graph that shows spikes and valleys? If you see something like the graph below, it very well could mean you are experiencing indexation issues. Notice how the indexation graph spikes, then drops a few weeks later. There may be legitimate reasons why this is happening, based on changes you made to your site. But you might also have no idea why your indexation is spiking, in which case you’ll need further site analysis to understand what’s going on. Once again, this is why SEO audits are so powerful.

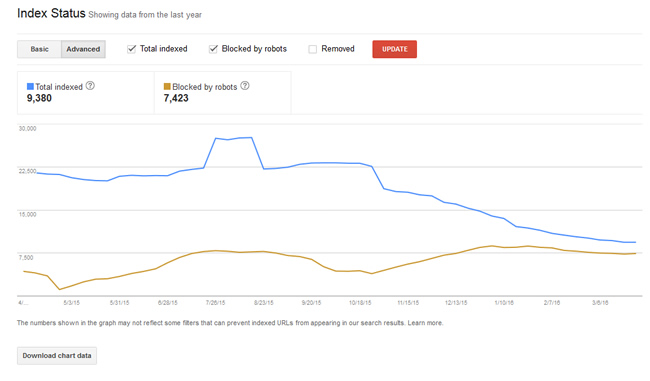
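If you jot down the “Total indexed” numbers from the graph each week, a few lines of Python can flag the kind of spikes and valleys described above. This is only a rough sketch: the function name, the 25% threshold, and the sample numbers are all made up for illustration, and a flagged week is a prompt to investigate, not a diagnosis.

```python
from typing import List, Tuple


def find_index_swings(counts: List[int], threshold: float = 0.25) -> List[Tuple[int, float]]:
    """Return (position, relative_change) for every week-over-week change
    whose size is at least `threshold` (0.25 means 25%)."""
    swings = []
    for i in range(1, len(counts)):
        previous = counts[i - 1]
        if previous == 0:
            continue  # relative change is undefined when the prior count is zero
        change = (counts[i] - previous) / previous
        if abs(change) >= threshold:
            swings.append((i, change))
    return swings


# Hypothetical weekly "Total indexed" values copied by hand from the report.
weekly_indexed = [1200, 1230, 1260, 2400, 2450, 1300, 1320]

for position, change in find_index_swings(weekly_indexed):
    print(f"week {position}: {change:+.0%}")
```

Gradual growth stays under the threshold, while the sudden jump and the later drop both get flagged. Tune the threshold to what is normal for your own site.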

Advanced Report

Now it’s time to dig into the advanced report, which definitely provides more data. When you click the “Advanced” tab, you’ll see two trending lines in the graph. The data includes:

- Total Indexed

- Blocked by Robots

“Total indexed” is the same data we saw in the basic report. “Blocked by robots” is just that: pages that you are choosing to block via robots.txt. Note, those are pages you are hopefully choosing to block… More about that below. Also, you can click “Removed” to see the number of URLs that have been removed as a result of a URL removal request. You’ll need to click “Update” to see the new trending line for URLs that have been removed.

What You Can Learn From Index Status

When you analyze the advanced report, you might notice some strange trending right off the bat. For example, if you see the number of pages blocked by robots.txt spike, then you know someone added new directives. One of my clients had that number jump from 0 to 20,000+ URLs in a short period of time. Again, if you want this to happen, then that’s totally fine. But if this surprises you, then you should dig deeper.

Depending on how you structure a robots.txt file, you can easily block important URLs from being crawled and indexed. It would be smart to analyze your robots.txt directives to make sure they are accurate. Speak with your developers to better understand the changes that were made, and why. You never know what you are going to find.
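One way to sanity-check your directives is to test a short list of important URLs against your robots.txt. Here is a minimal sketch using Python’s standard `urllib.robotparser` module. The `blocked_urls` helper, the sample directives, and the example.com URLs are hypothetical. Also note that this parser applies rules in file order and doesn’t understand wildcards, so it only approximates how Googlebot interprets a file. Treat the results as a first pass.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls, user_agent: str = "Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt: "Disallow: /products" was probably meant to block
# something narrower, but it matches every URL path starting with /products.
robots_txt = """\
User-agent: *
Disallow: /search/
Disallow: /products
"""

important_urls = [
    "https://www.example.com/",
    "https://www.example.com/products/blue-widget",
    "https://www.example.com/blog/index-status",
]

print(blocked_urls(robots_txt, important_urls))
```

If a URL you care about shows up in the output, that’s your cue to talk to your developers about the directive that’s catching it.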

Security Breach

Index Status can also flag potential hacking scenarios. If you notice the number of pages indexed spike or drop significantly, then it could mean that someone (or some bot) is adding or deleting pages from your site. For example, someone might be adding pages to your site that link out to a number of other websites delivering malware. Or maybe they are inserting rich anchor text links to other risky sites from newly created pages on your site. You get the picture.

Again, these reports don’t answer your questions. They prompt you to ask more questions. Take the data and speak with your developers. Find out what has changed on the site, and why. If you are still baffled, then have an SEO audit completed. As you can guess, these reports would be much more useful if the problematic URLs were listed. That would provide actionable data right within the Index Status reports in Google Search Console (GSC). My hope is that Google adds that data some day.

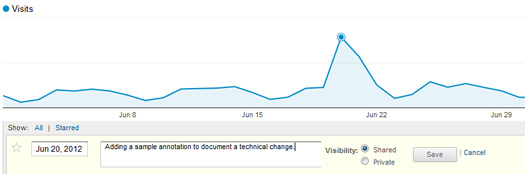

Bonus Tip: Use Annotations to Document Site Changes

For many websites, change is a constant occurrence. If you are rolling out new changes to your site on a regular basis, then you need a good way to document those changes. One way of doing this is by using annotations in Google Analytics. Using annotations, you can add notes for a specific date that are shared across users of the GA view. I use them often when changes are made SEO-wise. Then it’s easier to identify why certain changes in your reporting are happening. So, if you see strange trending in Index Status, then double check your annotations. The answer may be sitting right in Google Analytics. :)

Summary – Analyzing Your Index Status

I think the moral of the story here is that normal trending can indicate strong SEO health. You typically want to see gradual increases in indexation over time. That said, not every site will show that natural increase (as documented above in the screenshots). There may be spikes and valleys as technical changes are made to a website. So, it’s important to analyze the data to better understand the number of pages that are indexed, how many are being blocked by robots.txt, and how many have been removed. What you find might be completely expected, which would be good. But, you might be uncovering a serious issue that’s inhibiting important pages from being crawled and indexed. And that can be a killer SEO-wise.

GG

Thanks for this! Our SEOmoz report has been scaring us with a really high duplicate content number, but this shows that at least Google is indexing our pages.

Thanks Claire. I’m glad you found my post helpful. Although I’d love to see more data in these reports (like specific URLs), they definitely can help you identify potential issues.

I’ve used the reports heavily since they were released and have found the data to be extremely helpful. I just hope Google expands on the reports in future updates. :)

Great idea, specific URLs would be so helpful when looking to answer the “why” questions.

Google’s own image had their indexed pages higher than the not selected ones which may have started many people off with an initial concern that there’s a problem – so it was very helpful to see examples in your post of websites where it may actually be okay. Thanks Glenn!

Hey, thanks. I’m glad my post clarified some points for you. Regarding adding specific URLs, Google really needs to add that data. Some webmasters might misinterpret the reporting and end up making the situation worse. Don’t get me wrong, this is absolutely a step in the right direction. I would just love to see the next phase of the report! :)

Hey Glenn, thanks for this beautiful post.

I have a larger number of pages under the “NOT SELECTED” tab than under the “TOTAL INDEXED” tab. How should I tackle this? Yes, my site is ecommerce.

Hey, thanks. I’m glad you found my post helpful. It really depends on your specific situation. For example, if you have a lot of redirects in place for some reason, then it might make complete sense. Also, it could be how your site is handling pagination, canonicalization, etc.

I’ve seen “not selected” flag some serious canonical issues recently (during an audit I conducted). It shined a light on the problem, which my client is now fixing. I hope that helps.

My blog http://www.sarkarinokrinews.in/ is 3 months old. I use all of the above tricks, but my blog posts take 24 hours to get indexed in Google search. Please give me some ideas for quicker indexing.

Gaurav, you can’t guarantee ultra-fast results via any method. How quickly certain posts get indexed is based on a number of factors, including how often your site is getting crawled. 24 hours isn’t too bad considering your site is only 3 months old. :)

I am facing an issue with “Not Selected” and it has baffled me. The problem is on my mobile site (m.domain.com). My regular site is well indexed. But even after following all of Google’s recommendations (putting canonical tags on mobile pages and alternate tags on corresponding desktop pages, submitting a mobile sitemap), Google crawls the mobile pages but does not index them. Zero pages are getting indexed. I’m not sure what else I can check. There is no redirection on the mobile pages. Any ideas where to look?

To clarify, are you adding the canonical URL tag to your mobile pages, pointing to your desktop pages? If so, then you are telling Google that your mobile pages are a copy of your desktop pages (and that it should apply all link metrics to your desktop pages). This is the correct approach for most companies.

Are you experiencing ranking issues with your pages, or are you just wondering why those pages aren’t getting indexed? Again, the canonical url tag tells Google that page x is the non-canonical version of page y. I hope that helps.

Google takes more than a week to index my blog?!

I’ve got a simple 19-page site, no redirects, content simple and succinct. I’m having problems with “not selected” and don’t know how to fix it. No indexing either. Can you advise?

Hard to provide feedback without seeing the site and the reporting. “Not selected” isn’t necessarily a bad thing, unless it’s a very high count compared to pages indexed. What type of site is it?

Any clue on this one? Webmaster tools says that Google has indexed 23 URLs, yet when I go to Health>Index Status I see no progress. See attached images. When I search “site:example.com” my site is clearly indexed. Not a big deal, but it appears to be hurting my search rankings (I’m not ranking for anything).

CJ, how old is the website? Index Status in GWT could be lagging. Beyond that, without seeing the actual website, it’s hard to say what the problem could be. If you feel comfortable sending the domain name, it would be interesting to check out. Thanks.

Great post and great idea. Love the detail that you go into. I used to use ‘site:mysite.com’ to get all pages and then ‘site:mysite.com/*’ to get indexed and rankable pages until Google removed this function.

I’m looking round for a hack that will give back the same data for competitors so that you can benchmark, any ideas?

Glenn, this is a wonderful post that shined a light on some of my questions. But I still do not know should I worry about a spike in not selected pages on my domain. My main concern is will that spike affect our SEO efforts for our domain (which got hit hard by Panda and Penguin). We are a small limo company and we do all our SEO, website optimization and development in-house, so any advice would be greatly appreciated. Thanks Glenn!

Thanks Sean. I’m glad you found my post helpful.

It’s hard to provide any recommendations without analyzing your site, your index status reporting, etc. There can be natural levels of “not selected” based on the site at hand. But, seeing that level spike when other elements remain flat could be due to serious structural problems. What ratio are you seeing with regard to “not selected” versus indexed?

Hi Glenn, excellent post!! I have a similar problem to CJ’s. My company’s website (45+ pages) is more than 3 months old, and although ‘site:companyname.com’ gives me all the pages, my index status in GWT is showing much lower numbers (ever crawled = ‘4’ only, Total Indexed = 0). I’m worried about this because, despite being rich in content, I’m also not showing up for major keywords anywhere in Google search. My website is http://www.whitecapers.com. Would appreciate your help.

Hard to say without digging into the site and cross-referencing your index status reporting. How are your rankings and traffic from Google?

Great post, thanks. I was wondering if anyone could offer help. My index status looks very poor and my blog hasn’t been ranking well recently. There’s an image below. Any idea how to fix this or what I can do?

Matt, thanks for your comment. For a site with only 55 pages indexed, I think it’s problematic to see “not selected” at 18K+. That could very well be revealing a serious structural problem.

In addition, indexation is actually dropping… I would look to have an SEO technical audit completed. You need to identify what’s going on, and how to fix it. I hope that helps.

Thank you very much for this. I am absolutely lost anyway… our site is not ranking and it was before, and I do not know what is going on… I have noticed a rapid step down in indexed pages, but we also removed pages in different languages as they were causing problems, so it might be due to that. Is there some tutorial for girls on how to find out what is wrong with my site?

I’m sorry to hear about your drop in indexed pages. It’s hard to say what’s going on without analyzing the site. And since it could be one of many things, there’s really not a tutorial for the analysis.

Are you noticing a steep drop in organic search traffic along with the decline in indexed pages?

Thank you very much Glenn, and sunny greetings from Diani! It is very weird, search traffic has dropped only slightly. The problem is that we don’t even rank for the keywords we want, like Diani beach, Kenya, etc. I feel like crying when I see that Google values spam websites, which are on the 1st page when I google Diani beach! I even have the feeling that since we installed the Yoast SEO plugin everything got worse :-(

Your best bet is to have someone audit your site from an SEO perspective. It sounds like you have a technical problem that needs to be rectified. Again, it’s hard for me to advocate next steps without analyzing the situation.

Glenn,

So glad to find a resource like this!

After steady climbs in Google indexing my blog, it plummeted a few weeks ago from 1,574 in late Feb to 1,143 on March 3rd, and as of today it’s 1,042.

I use WordPress and did install a robots.txt file to prevent duplicate content issues, clean up archives, etc., but that was several months ago.

What has changed though is that I recently adjusted my WordPress SEO settings (the Yoast plugin) to do 2 things: redirect attachment URLs to parent post URLs, and remove the ?replytocom variables.

I thought doing so would help with HTML and crawling improvements.

Do you think that could be the culprit, and if so, is it good that it’s excising duplicate pages? I also ask this because when I click on the advanced settings, I do not see the “not selected” graph, but all the others are showing.

Or am I getting penalized by Panda? I’m just not sure as to why (I’m a tad technically challenged, but try to follow best SEO practices as much as I understand them).

Sorry for the long post, but this has been making me mental and I’ve had no luck getting feedback from the plugin author as of yet.

Thanks for your time!

Michael

Thanks for your comment Michael, and I’m glad my post was helpful. Seeing a drop in indexation after making changes like you did is ok (as long as the right pages are getting removed). :) It’s really hard to diagnose the situation without analyzing your site in detail.

Have you noticed a drop in rankings and organic search traffic during the drop? Also, Google removed “not selected” from webmaster tools a few months ago! I was unhappy to see that removed… Google said it was confusing webmasters. I loved having those numbers and trending.

I had similar problem after steady increase in indexed pages it dropped suddenly. I am not an expert and can’t remember the foolish SEO mistake responsible for this. Only thing I understand is low content and traffic that could have resulted in my blog being labeled as spam. Also my blog ranking was reduced to 1 from PR 2.

My health blog, clickhealthtips.com, is not indexed in Google. Total index status is 0. How do I solve this problem?

I’m seeing 70 pages indexed using a site command for your website. When did you set up and verify your site in Google Webmaster Tools? I believe index status updates ~weekly. Also, do you have both www and non-www set up and verified in GWT?

Simply stated, if it goes up that’s good. If it goes down, it’s not good!

No, that’s not the case. Google may be indexing pages that shouldn’t be indexed (based on a number of issues). And in certain cases, it could be thousands of pages, tens of thousands, or worse. That’s why it’s important to understand how many canonical pages you have, and then reference index status to see how many Google has indexed.

XML sitemap reporting is tied to the sitemaps being submitted to Google. How many urls have been submitted via xml sitemaps and how many does Google show as indexed (in the xml sitemap reporting)?

If index status shows 9 pages indexed, then Google could have found those pages through normal crawls. I hope that helps.

Thanks for awesome article.

Hard to say without checking out the site. Index status in GWT updates approx. weekly. That can throw off the numbers. I hope that helps.

Hi there… not sure if I can get some feedback on this but I would really appreciate it!

On our site we have approximately 2,502 pages indexed via our sitemap.xml but in our WMT Index Status its showing over 4,439 pages and growing. This may be a silly question but could someone tell me where these extra pages are coming from? I have the parameters setup, canonicals set, etc… but the indexed pages seem to keep growing? Any feedback greatly appreciated!!

Thanks!

Isabella, that would concern me. There may be a technical problem that’s creating more urls for the bots to crawl. I’ve seen situations like that get way out of control.

I would crawl your site via Screaming Frog and see what you find. Performing a thorough crawl analysis could help you identify the additional urls getting crawled and indexed. Then you can handle appropriately.
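To make that comparison concrete, here’s a rough Python sketch that diffs a list of crawled URLs against the URLs in an XML sitemap. It assumes you’ve already pulled the crawled URLs out of your crawler’s export into a plain list (I’m not assuming any particular export format here), and the helper names and example.com URLs are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps (sitemaps.org protocol).
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemap_urls(sitemap_xml: str) -> set:
    """Extract every <loc> value from a sitemap XML string."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.iter(f"{SITEMAP_NS}loc") if loc.text}


def unexpected_urls(crawled, sitemap_xml: str) -> list:
    """Return crawled URLs that are missing from the sitemap, sorted."""
    return sorted(set(crawled) - sitemap_urls(sitemap_xml))


# Hypothetical sitemap and crawl results.
sitemap_xml = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/widgets</loc></url>
</urlset>
"""

crawled = [
    "https://www.example.com/",
    "https://www.example.com/widgets",
    "https://www.example.com/widgets?sort=price",
    "https://www.example.com/widgets?sessionid=123",
]

for url in unexpected_urls(crawled, sitemap_xml):
    print(url)
```

In this made-up case, the extra URLs are parameter variations of a page that’s already in the sitemap, which is a common source of runaway indexation.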

Right now i have 215,821 urls submitted and out of them 88,401 are indexed right now. The number of indexed urls are going down on a regular basis. What can be the problem?

Hard to say without analyzing the site and those urls. Are they unique? Do they all resolve with 200 header response codes? Are they canonicalized? I would make sure you only include canonical urls in your xml sitemaps (pages you want indexed that resolve with 200 response codes). I hope that helps.
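As a sketch of that filtering step, the Python below keeps only URLs that returned a 200 and whose canonical tag points to themselves. It works on data you’ve already collected in a crawl. The `CrawledPage` record, the `sitemap_candidates` helper, and the sample URLs are hypothetical, and treating a page with no canonical tag as self-canonical is my assumption, so adjust it for your own site.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass
class CrawledPage:
    url: str
    status: int               # HTTP response code seen during the crawl
    canonical: Optional[str]  # rel="canonical" target, or None if the tag is absent


def sitemap_candidates(pages: Iterable[CrawledPage]) -> List[str]:
    """Keep URLs that resolve with 200 and are their own canonical.
    Assumption: a page without a canonical tag counts as self-canonical."""
    return [
        page.url
        for page in pages
        if page.status == 200 and page.canonical in (None, page.url)
    ]


# Hypothetical crawl results.
pages = [
    CrawledPage("https://www.example.com/a", 200, "https://www.example.com/a"),
    CrawledPage("https://www.example.com/a?ref=nav", 200, "https://www.example.com/a"),
    CrawledPage("https://www.example.com/old", 301, None),
    CrawledPage("https://www.example.com/gone", 404, None),
    CrawledPage("https://www.example.com/b", 200, None),
]

print(sitemap_candidates(pages))
```

Anything that redirects, 404s, or canonicalizes to another URL gets dropped, so the submitted count and the indexed count in the sitemap reporting become a fairer comparison.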

Hi, Glenn

Thanks a lot for your answer.

I have checked all of those points and they are all in place. Even so, my site’s indexed URL count is decreasing gradually. Yesterday it was 82,000 and today it is 79,235. My site is https://songdew.com/

I usually use GWM crawl section -> sitemaps which says total indexed Urls are 79,235 and total submitted Urls are 216,835.

I am really concerned why the URLs which are indexed are getting de-indexed.

Kindly see if anyone can help me out.

Thanks!

A quick site command shows 171K urls indexed. Is that across subdomains? Each subdomain is separate in GSC, so you need to set each one up. Again, hard to say without having access to the site in GSC and without analyzing it. But again, a site command shows many more pages indexed on your domain than 80K. I hope that helps.